Science has always relied on a combination of approaches to derive an answer or develop a theory. The seeds for Darwin’s theory of natural selection grew under a Herculean aggregation of observation, data and experiment. The more recent confirmation of gravitational waves by the Laser Interferometer Gravitational-Wave Observatory (LIGO) was a decades-long interplay of theory, experiment and computation.

Certainly, this idea was not lost on the U.S. Department of Energy’s (DOE) Argonne National Laboratory, which has helped advance the boundaries of high-performance computing technologies through the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility.

Realizing the promise of exascale computing, the ALCF is developing a framework to enable an advanced combination of simulation, data analysis and machine learning. This effort will undoubtedly reframe the way science is conducted and do so on a global scale.

Since the ALCF was established in 2004, the methods used to collect, analyze and employ data have changed dramatically. Where data were once the product of and limited by physical observation and experiment, advances in feeds from scientific instrumentation such as beamlines, colliders and space telescopes — just to name a few — have increased data output substantially, giving way to new terminologies, such as “big data.”

While the scientific method remains intact and the human instinct to ask big questions still drives research, the way we respond to this new windfall of information requires a fundamental shift in how we use emerging computing technologies for analysis and discovery.

This convergence of simulation, data and learning is driving an ever more complex, but logical, feedback loop.

Increased computational capability supports larger scientific simulations that generate massive datasets used to feed a machine learning process, the output of which informs further and more precise simulation. This, too, is then augmented by data from observations, experiments, etc., to refine the process using data-driven approaches.

“While we have always had this tradition of running simulations, we’ve been working incrementally for more than a few years now to robustly integrate data and learning,” says Michael Papka, ALCF director, deputy associate laboratory director for Computing, Environment and Life Sciences (CELS) and Northern Illinois University professor.

To advance that objective, the facility launched its ALCF Data Science Program (ADSP) in 2016 to explore and improve computational methods that could better enable data-driven discoveries across scientific disciplines.

Early in 2018, the CELS directorate announced the creation of the Computational Science (CPS) and Data Science and Learning (DSL) divisions to explore challenging scientific problems through advanced modeling and simulation, data analysis and other artificial intelligence methods. Those divisions are now fully integrated into CELS and provide increased senior leadership among four computing divisions, helping to extend a single Argonne computing effort, while at the same time pushing their respective computing areas forward.

“The new divisions have allowed for increased focus in each particular area and, even more importantly, over the last two years we have seen increased engagement with other areas of the Lab, as exemplified by the creation of interdivisional appointments between CPS and divisions focusing on energy sciences, chemistry, materials science and nuclear and high energy physics,” says CPS director Salman Habib.

Already, this combination of programs and entities is being tested and proved through studies that cross the scientific spectrum, from understanding the origins of the universe to deciphering the neural connectivity of the brain.

Convergence for a brighter future

Data has always been a key driver in science, and there is exponentially more of it than there was, say, 10 years ago. The catchphrase “big data” gets thrown about a lot these days, but the size and complexity of the data now available pose real challenges while also providing opportunities for new insights.

No doubt Darwin’s research was big data for its time, but it was the culmination of nearly 30 years of painstaking collection and analysis. He might have whittled down the process considerably had he had access to high-performance computers and to data analysis and machine learning techniques, such as data mining.

“These techniques don’t fundamentally change the scientific method, but they do change the scale or the velocity or the kind of complexity you can deal with,” notes Rick Stevens, CELS associate laboratory director and University of Chicago professor.

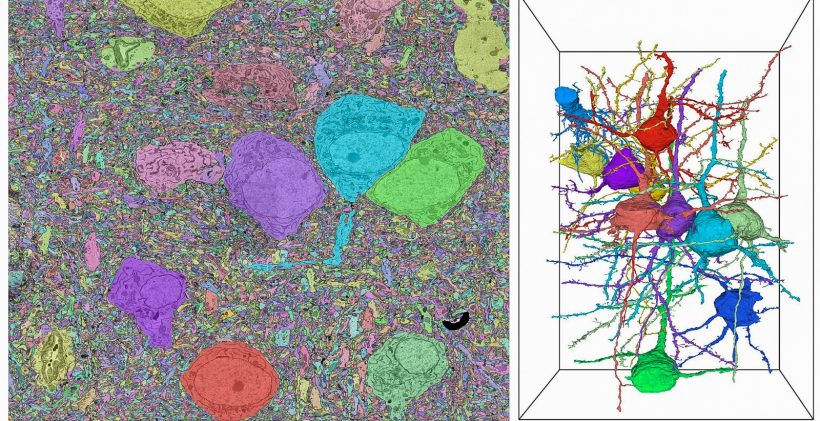

Take, for example, the development of a brain connectome, which computational scientists and neuroscientists alike hope will be an accurate roadmap of every connection between neurons, identifying cell types and the locations of dendrites, axons and synapses. In essence, it would map the communication or signaling pathways of a brain.

It is the kind of research that was all but impossible until the advancement of ultra-high-resolution imaging techniques and path-to-exascale computational resources, allowing for finer resolution of microscopic, diaphanous anatomy and the ability to wrangle the sheer size of the data, respectively.

A whole mouse brain, which is only about a centimeter cubed, could generate an exabyte — or a billion billion bytes — of data at a reasonable resolution, notes Nicola Ferrier, a senior computer scientist in Argonne’s Mathematics and Computer Science division.

With the computing power to handle that scale, she and her team are working on smaller samples, some a millimeter cubed, which can still generate a petabyte of data.
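The two figures above are consistent with a simple volume scaling, which can be checked with a few lines of arithmetic. This is only a back-of-the-envelope sketch: it assumes data density is uniform across the tissue, and the function name `bytes_for_volume` is purely illustrative.

```python
# Back-of-the-envelope check: how imaging data scales with sample volume.
EXABYTE = 10**18   # bytes ("a billion billion bytes")
PETABYTE = 10**15  # bytes

MM3_PER_CM3 = 10**3  # 1 cm = 10 mm, so 1 cm^3 = 1,000 mm^3


def bytes_for_volume(volume_mm3, bytes_per_mm3):
    """Estimate data size for a sample, assuming uniform data density."""
    return volume_mm3 * bytes_per_mm3


# Assumption: density is derived from the whole-brain figure
# (about one exabyte for about one cubic centimeter).
bytes_per_mm3 = EXABYTE / MM3_PER_CM3

small_sample = bytes_for_volume(1.0, bytes_per_mm3)
print(small_sample / PETABYTE)  # 1.0, i.e. about one petabyte per cubic millimeter
```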

Working primarily with mouse brain samples, Ferrier and her team are developing a computational pipeline to analyze the data obtained from a complicated process of slicing and imaging. Their research is being carried out through the ALCF’s Aurora Early Science Program, which supports teams working to prepare codes for the architecture and scale of the forthcoming exascale supercomputer, Aurora.

The process begins with electron microscopy images of the sliced brain samples, which produce massive amounts of data. The images of the slices are stitched, then reassembled into a 3D volume, which is itself segmented to determine where the neurons and synapses are located.
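The stack-then-segment idea can be illustrated with a toy example. The sketch below is not the team's pipeline: it assumes the slices are already stitched and reduced to binary masks, and it uses a classical breadth-first flood fill as a stand-in for segmentation. The names `stack_slices` and `flood_fill_segment` are hypothetical.

```python
from collections import deque


def stack_slices(slices):
    """Stack equally sized 2D slices into a 3D volume indexed [z][y][x]."""
    height, width = len(slices[0]), len(slices[0][0])
    for s in slices:
        if len(s) != height or any(len(row) != width for row in s):
            raise ValueError("all slices must have the same shape")
    return [[list(row) for row in s] for s in slices]


def flood_fill_segment(volume, seed):
    """Return the set of foreground voxels 6-connected to the seed voxel."""
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    z0, y0, x0 = seed
    if not volume[z0][y0][x0]:
        return set()
    segment = {seed}
    queue = deque([seed])
    steps = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in steps:
            nz, ny, nx = z + dz, y + dy, x + dx
            inside = 0 <= nz < depth and 0 <= ny < height and 0 <= nx < width
            if inside and (nz, ny, nx) not in segment and volume[nz][ny][nx]:
                segment.add((nz, ny, nx))
                queue.append((nz, ny, nx))
    return segment


# Two tiny binary "slices" containing two separate structures.
slices = [
    [[1, 0, 0],
     [1, 0, 1],
     [0, 0, 1]],
    [[1, 0, 0],
     [0, 0, 1],
     [0, 0, 1]],
]
volume = stack_slices(slices)
segment_a = flood_fill_segment(volume, (0, 0, 0))  # structure on the left
segment_b = flood_fill_segment(volume, (0, 1, 2))  # structure on the right
print(len(segment_a), len(segment_b))  # 3 4
```

Tracing connectivity through the stacked volume, rather than slice by slice, is what lets a structure be followed from one slice into the next.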


The segmentation step relies on an artificial intelligence technique called a convolutional neural network; in this case, a flood-filling network developed by Google for the reconstruction of neural circuits from electron microscopy images of the brain. While it has demonstrated better performance than past approaches, the technique also comes with a higher computational cost.

“We have scaled that process and we’ve scaled to thousands of nodes on the ALCF’s Theta supercomputer,” says Ferrier. “When you go to do the segmentation on that large volume, you have to distribute the data on the computer. And after you’ve run your inference on the data, you have to put it back together. Finally, it all has to be analyzed.

“So, the problem of executing a brain connectome is an exascale problem,” she adds. Without the power of a machine like Aurora, the tasks could not be accomplished. With the ability to handle such massive amounts of data, researchers will be able to answer questions such as: What happens when we learn? What happens when you have a degenerative disease? How does the brain age?

Questions for which we have been seeking answers for millennia.
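The distribute, infer and reassemble pattern that Ferrier describes can be sketched in miniature. Everything here is an assumption made for illustration: threads stand in for compute nodes, a simple intensity threshold (`run_inference`) stands in for the neural network, and the volume is split only along the slice axis.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_blocks(volume, block_depth):
    """Split a [z][y][x] volume into consecutive blocks along the z axis."""
    return [volume[z:z + block_depth] for z in range(0, len(volume), block_depth)]


def run_inference(block):
    """Hypothetical stand-in for model inference: threshold each voxel at 128."""
    return [[[1 if v >= 128 else 0 for v in row] for row in sl] for sl in block]


def segment_distributed(volume, block_depth, workers=4):
    """Distribute blocks to workers, run inference, and reassemble in order."""
    blocks = split_into_blocks(volume, block_depth)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map returns results in the same order as the input blocks.
        results = list(pool.map(run_inference, blocks))
    return [sl for block in results for sl in block]


# Small synthetic volume: 5 slices, each 2 rows by 4 columns.
volume = [[[z * 50 + x * 40 for x in range(4)] for _ in range(2)] for z in range(5)]
labels = segment_distributed(volume, block_depth=2)

# The reassembled result matches running inference on the undivided volume.
assert labels == run_inference(volume)
```

Because this stand-in treats every voxel independently, blocks can be stitched back by simple concatenation. A real segmentation would likely also need overlapping block boundaries and a step to reconcile labels across them.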

One machine to bind them all

Whether the quest is to develop a connectome or to understand the key flow physics behind more efficient wind turbine blades, the merging and flourishing of data and artificial intelligence techniques with advanced computational resources is possible only because of the exponential and deliberate development of high-performance computing and data delivery systems.

“Supercomputer architectures are being structured to make them more amenable to dealing with large amounts of data and facilitating learning, in addition to traditional simulations,” says Venkat Vishwanath, ALCF data sciences lead. “And we are fitting these machines with massive conduits that allow us to stream large amounts of data from the outside world, like the Large Hadron Collider at CERN and our own Advanced Photon Source (APS), and enable data-driven models.”

Many current architectures still require the transfer of data from computer to computer, from one machine, the sole function of which is simulation, to another that excels in data analysis and/or machine learning.

Within the last few years, Argonne and the ALCF have made a solid investment in high-performance computing that gets them closer to a fully integrated machine. The process accelerated in 2017, with the introduction of the Cray XC40 system, Theta, which is capable of combining traditional simulation runs and machine learning techniques.

In 2020, with the benefit of the Coronavirus Aid, Relief and Economic Security (CARES) Act funding, Theta was augmented with 24 NVIDIA DGX A100 nodes, increasing the performance of the system by more than 6 petaflops and bringing GPU-enabled acceleration to the Theta workloads.

Arriving in 2021 will be ALCF’s newest machine, Polaris — a CPU/GPU hybrid resource that provides the opportunity for new and existing users to continue to prepare and scale their codes, and ultimately their science, on a resource that will look very much like future exascale systems. Polaris will provide substantial new compute capabilities for the facility and greatly expand its support for data and learning workloads. The system will be fully integrated with the 200-petabyte file system ALCF deployed in 2020, with increased data sharing support.

The ALCF will further drive simulation, data and learning to a new level in the near future, when it unveils one of the nation’s first exascale machines, Aurora. While it can perform a billion billion calculations per second, its main advantage may be its ability to conduct and converge simulation, data analysis and machine learning under one hood. The end result will allow researchers to approach new types of problems, as well as much larger ones, and reduce time to solution.

“Aurora will change the game,” says the ALCF’s Papka. “We’re working with vendors Intel and HPE to assure that we can support science through this confluence of simulation, data and learning all on day one of Aurora’s deployment.”

Whether by Darwin or Turing, whether with chalkboard or graph paper, some of the world’s great scientific innovations were the product of one or several determined individuals who well understood the weight of applying balanced and varied approaches to support — or refute — a hypothesis.

Because current innovation is driven by collaboration among colleagues and between disciplines, the potential for discovery through the pragmatic application of new computational resources, coupled with unrestrained data flow, staggers the imagination.

The ALCF and APS are DOE Office of Science User Facilities.

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

About the Advanced Photon Source

The U.S. Department of Energy Office of Science’s Advanced Photon Source (APS) at Argonne National Laboratory is one of the world’s most productive X-ray light source facilities. The APS provides high-brightness X-ray beams to a diverse community of researchers in materials science, chemistry, condensed matter physics, the life and environmental sciences, and applied research. These X-rays are ideally suited for explorations of materials and biological structures; elemental distribution; chemical, magnetic, electronic states; and a wide range of technologically important engineering systems from batteries to fuel injector sprays, all of which are the foundations of our nation’s economic, technological, and physical well-being. Each year, more than 5,000 researchers use the APS to produce over 2,000 publications detailing impactful discoveries, and solve more vital biological protein structures than users of any other X-ray light source research facility. APS scientists and engineers innovate technology that is at the heart of advancing accelerator and light-source operations. This includes the insertion devices that produce extreme-brightness X-rays prized by researchers, lenses that focus the X-rays down to a few nanometers, instrumentation that maximizes the way the X-rays interact with samples being studied, and software that gathers and manages the massive quantity of data resulting from discovery research at the APS.

This research used resources of the Advanced Photon Source, a U.S. DOE Office of Science User Facility operated for the DOE Office of Science by Argonne National Laboratory under Contract No. DE-AC02-06CH11357.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science.