From mapping the human brain to accelerating the discovery of new materials, the power of exascale computing promises to advance the frontiers of some of the world’s most ambitious scientific endeavors.

But applying the immense processing power of the U.S. Department of Energy’s (DOE) upcoming exascale systems to such problems is no trivial task. Researchers from across the high-performance computing (HPC) community are working to develop software tools, codes, and methods that can fully exploit the innovative accelerator-based supercomputers scheduled to arrive at DOE national laboratories starting in 2021.

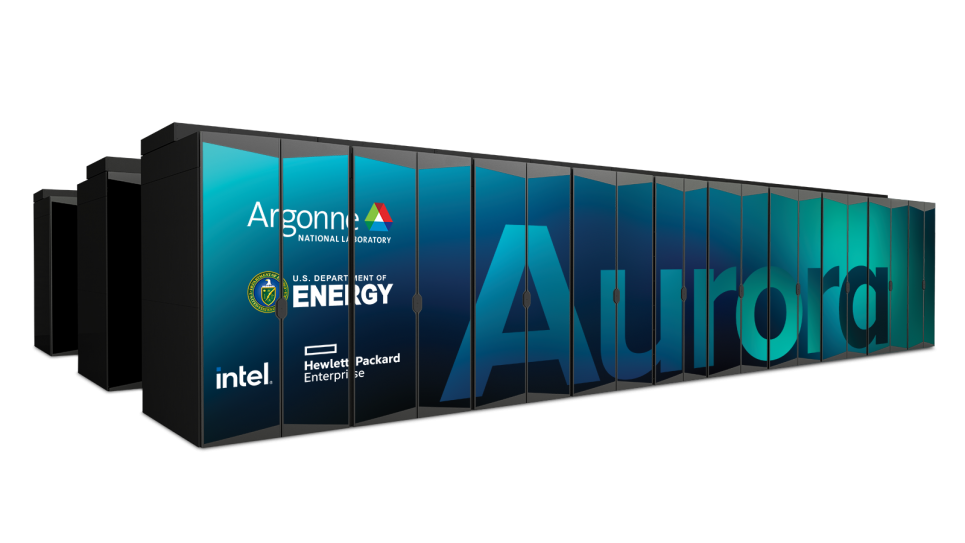

As the future home to the Aurora system being developed by Intel and Hewlett Packard Enterprise (HPE), DOE’s Argonne National Laboratory has been ramping up efforts to ready the supercomputer and its future users for science in the exascale era. Argonne researchers are engaged in a broad range of preparatory activities, including exascale code development, technology evaluations, user training, and collaborations with vendors, fellow national laboratories, and DOE’s Exascale Computing Project (ECP).

“Science on day one is our goal when it comes to standing up new supercomputers,” said Michael Papka, Director of the Argonne Leadership Computing Facility (ALCF), a DOE Office of Science User Facility. “It’s always challenging to prepare for a bleeding-edge machine that does not yet exist, but our team is leveraging all available tools and resources to make sure the research community can use Aurora effectively as soon as it’s deployed for science.”

The process of planning and preparing for new leadership-class supercomputers takes years of collaboration and coordination. Susan Coghlan, ALCF Project Director for Aurora, has played a key role in the development and delivery of all ALCF production systems dating back to the IBM Blue Gene/L supercomputer that debuted in 2005.

“Our team has years of experience fielding these extreme-scale systems. It requires deep collaboration with vendors on the development of hardware, software, and storage technologies, as well as facility enhancements to ensure we have the infrastructure in place to power and cool these massive supercomputers,” said Coghlan, who also serves as the ECP’s Deputy Director of Hardware and Integration. “With Aurora, we have the added layer of collaborating with ECP, which has allowed us to work with a broader science portfolio, a broader software portfolio and closer partnerships with the other labs than we have had in the past.”

The ECP is a key partner in the efforts to prepare for DOE’s first exascale supercomputers: Argonne’s Aurora system, Oak Ridge National Laboratory’s Frontier system, and Lawrence Livermore National Laboratory’s El Capitan system. Launched in 2016, the multi-lab initiative is laying the groundwork for the deployment of these machines by building an ecosystem that encompasses applications, system software, hardware technologies, and workforce development. Researchers from across Argonne—one of the six ECP core labs—are helping the project to deliver on its goals.

The ALCF team has been partnering with the ECP on several efforts. These include developing a common continuous integration strategy to create an environment that enables regular software testing across DOE’s exascale facilities; using the Spack package manager as a tool for build automation and final deployment of software; exploring and potentially enhancing the installation, upgrade and monitoring capabilities of HPE’s Shasta software stack; and working to enable container support and tools on Aurora and other exascale systems.

Working in concert with the ECP, Argonne researchers are also contributing to the advancement of programming models (OpenMP, SYCL, Kokkos, RAJA), language standards (C++), and compilers (Clang/LLVM) that are critical to developing efficient and portable exascale applications. Furthermore, the ALCF continues to work closely with Intel and HPE on the testing and development of various components to ensure that the scientific computing community can leverage them effectively.

“By analyzing the performance of key benchmarks and applications on early hardware, we are developing a broad understanding of the system’s architecture and capabilities,” said Kalyan Kumaran, ALCF Director of Technology. “This effort helps us to identify best practices for optimizing codes and, ultimately, create a roadmap for future users to adapt and tune their software for the new system.”

Another critical piece of the puzzle is the Aurora Early Science Program (ESP), an ALCF program focused on preparing key applications for the scale and architecture of the exascale machine. Through open calls for proposals, the ESP awarded pre-production computing time and resources to a diverse set of projects that are employing emerging data science and machine learning approaches alongside traditional modeling and simulation-based research. The research teams also field-test compilers and other software, helping to pave the way for more applications to run on the system.

“In addition to fostering application readiness, the Early Science Program allows researchers to pursue ambitious computational science projects that aren’t possible on today’s most powerful systems,” said Katherine Riley, ALCF Director of Science. “We are supporting projects that are gearing up to use Aurora for extreme-scale cosmological simulations, AI-driven fusion energy research, and data-intensive cancer studies, to name a few.”

The ESP projects are investigating a wide range of computational research areas that will help prepare Aurora for prime time. These include mapping and optimizing complex workflows; exploring new machine learning methodologies; stress testing I/O hardware and other exascale technologies; and enabling connections to large-scale experimental data sources, such as CERN’s Large Hadron Collider, for analysis and guidance.

With access to the early Aurora software development kit (a frequently updated version of the publicly available oneAPI toolkit) and Intel Iris (Gen9) GPUs through Argonne’s Joint Laboratory for System Evaluation, researchers participating in the ESP and ECP are able to test code performance and functionality using the programming models that will be supported on Aurora.

A variety of training events hosted by the DOE computing facilities, ECP and system vendors provide application teams with opportunities to receive hands-on assistance in using the exascale tools and technologies that are available for testing and development work.

The Argonne-Intel Center of Excellence (COE) has held multiple workshops to provide details and instruction on various aspects of the Aurora hardware and software environment. Open to ECP and ESP project teams, these events include substantial hands-on time for attendees to work with Argonne and Intel experts on developing, testing and profiling their codes, as well as deep dives into topics like the Aurora software development kit, the system’s memory model, and machine learning tools and frameworks. Participants also have the opportunity to share programming progress, best practices, and lessons learned. In addition, the COE continues to host virtual hackathons with individual ESP teams. These multi-day collaborative events connect researchers with Argonne and Intel staff members for marathon hands-on sessions dedicated to accelerating their application development efforts.

To provide exascale training opportunities to the broader HPC community, the ALCF launched its new Aurora Early Adopter webinar series earlier this year. Open to the public, the webinars have covered Aurora’s DAOS I/O platform, oneAPI’s OpenMP offload capabilities, and the oneAPI Math Kernel Library (oneMKL).

Together, these activities and collaborations are preparing the scientific community to harness exascale computing power to drive a new era of scientific discoveries and technological innovations.

“All of our efforts to prepare for Aurora are central to enabling science on day one,” Papka said. “We have all hands on deck to ensure the system is ready for scientists, and scientists are ready for the system.”

The Argonne Leadership Computing Facility provides supercomputing capabilities to the scientific and engineering community to advance fundamental discovery and understanding in a broad range of disciplines. Supported by the U.S. Department of Energy’s (DOE’s) Office of Science, Advanced Scientific Computing Research (ASCR) program, the ALCF is one of two DOE Leadership Computing Facilities in the nation dedicated to open science.

Argonne National Laboratory seeks solutions to pressing national problems in science and technology. The nation’s first national laboratory, Argonne conducts leading-edge basic and applied scientific research in virtually every scientific discipline. Argonne researchers work closely with researchers from hundreds of companies, universities, and federal, state and municipal agencies to help them solve their specific problems, advance America’s scientific leadership and prepare the nation for a better future. With employees from more than 60 nations, Argonne is managed by UChicago Argonne, LLC for the U.S. Department of Energy’s Office of Science.

The U.S. Department of Energy’s Office of Science is the single largest supporter of basic research in the physical sciences in the United States and is working to address some of the most pressing challenges of our time. For more information, visit https://energy.gov/science